How we built multiple screen sharing into Microsoft Teams calls

Mar 05, 2024 · 7 min read

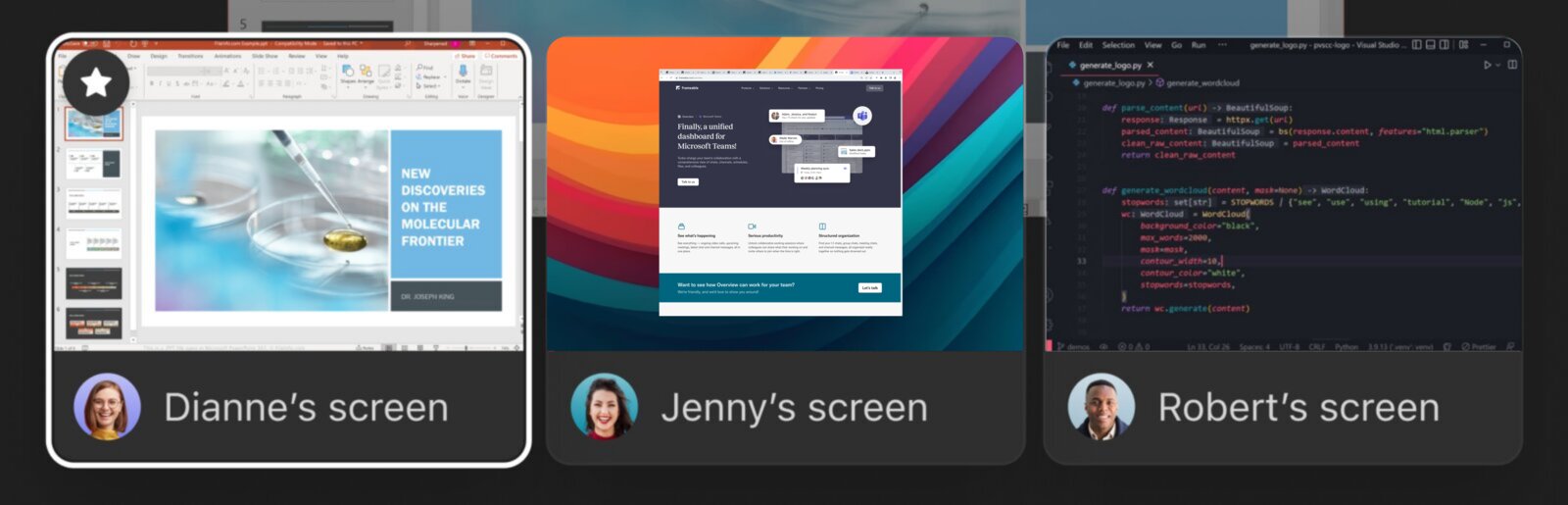

To some it sounds like a crazy prospect, but those of us who work on seriously productive remote and hybrid teams know that it is completely essential for multiple people on a video call to be able to share their screens at the same time.

When crafting strategy, when building software, when troubleshooting, when training new hires, and in so many other situations, conversations are richer and move faster when people can refer to the designs, source code, system logs, software interfaces and whatever else might be on their screen, without having to wait their turn or ask permission. For a presentation in a meeting, sure, one screenshare at a time is what you want, but for the way that modern teams work, sharing multiple screens at once is an absolute requirement.

However, if you are one of the 330 million Microsoft Teams users, you may know that Teams still doesn't offer this ability. So, we built the capability for our own team, and then released it as a product that you can try for yourself.

Can we actually bend Teams to our will?

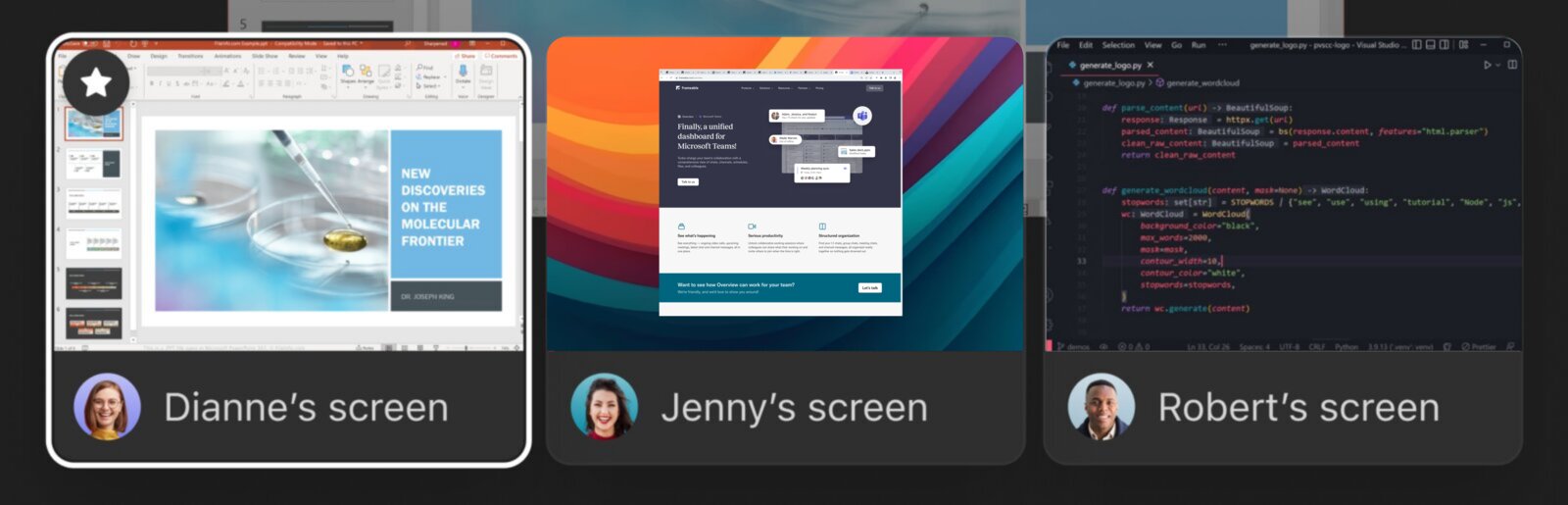

Microsoft does provide certain hooks and surface areas for app developers to work with, both outside and inside of Teams video calls. And so, we wondered, could we find a way to build this feature into existing Teams calls, just with the APIs available?

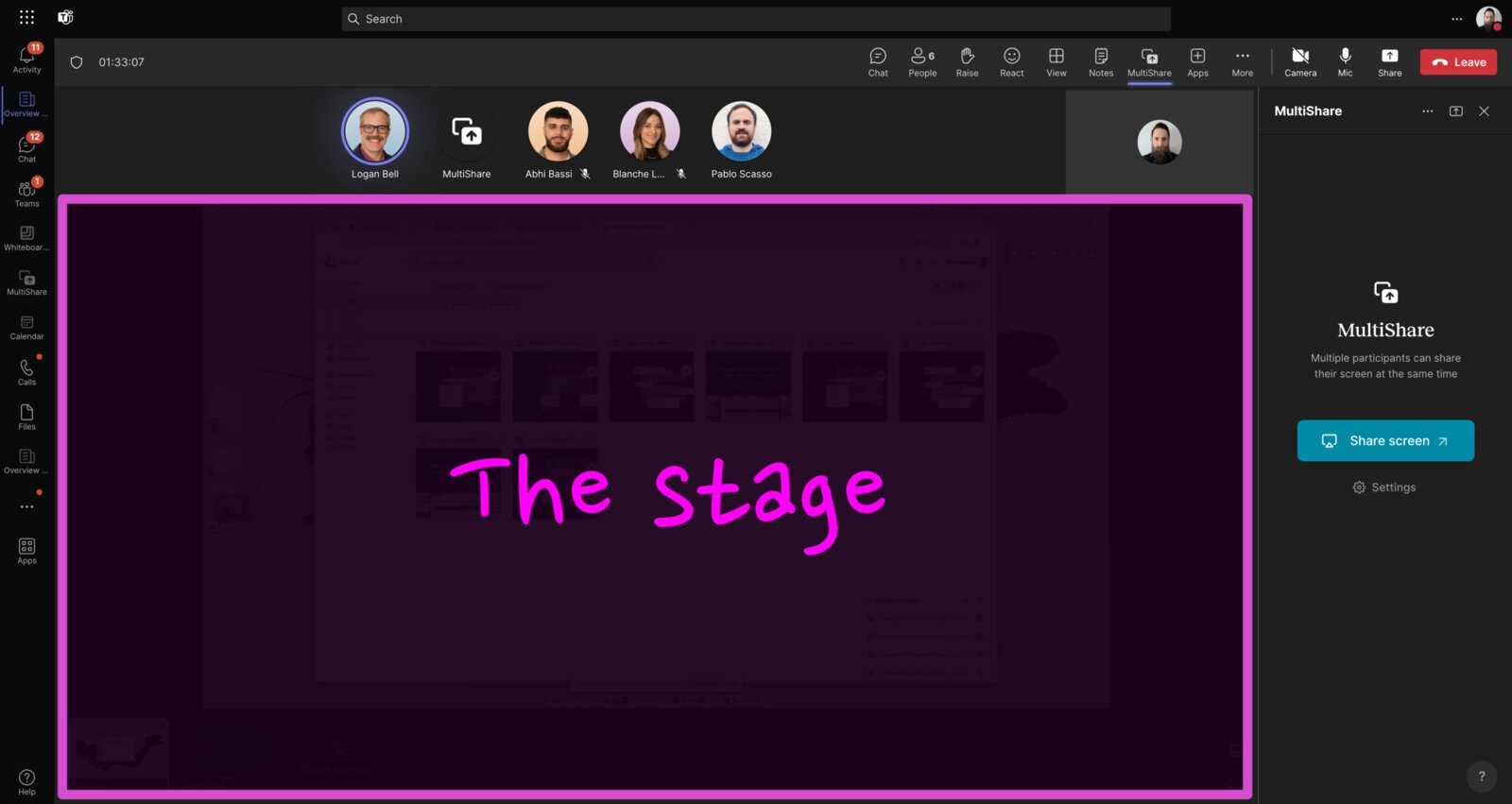

Teams calls have the concept of a "stage" where content can be featured: a spotlighted video stream, a shared screen, or iframe content specified by an app that has been installed to the call. When a call participant calls shareAppContentToStage(), the app content takes over that space. Okay, so that's one ingredient: we can share content to the stage of a Teams call via an iframe that we host. Now how can we get screen video streams in there?
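To make that first ingredient concrete, here is a minimal sketch rather than our production code. It assumes the meeting object is the meeting namespace from the Teams JavaScript SDK (already initialized), which exposes shareAppContentToStage() with a callback-first signature. The shareToStage name and the example URL are ours, purely for illustration.

```javascript
// Wrap the callback-style SDK call in a Promise.
// `meeting` is assumed to be the `meeting` namespace from @microsoft/teams-js;
// it is passed in so the function can also be exercised outside of Teams.
function shareToStage(meeting, appContentUrl) {
  return new Promise((resolve, reject) => {
    meeting.shareAppContentToStage((error, result) => {
      if (error) {
        // The SDK reports failures as an object with errorCode and message.
        reject(new Error(error.message || `Teams SDK error ${error.errorCode}`));
      } else {
        resolve(result);
      }
    }, appContentUrl);
  });
}
```

Inside a meeting side panel this would be called as something like shareToStage(microsoftTeams.meeting, 'https://example.com/stage'), where the URL points at the page we host for the stage iframe.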

Getting the screen pixels

The standard way to get a screen stream is via getDisplayMedia(). This pops up a browser dialog which asks the user to choose a screen, window, or tab to share, and then returns a MediaStream. In our case though, there's a problem: our iframe is sandboxed by its host, and the display-capture permission is not explicitly allowed. So, what can we do? If we could somehow run this from an origin that we control, we would be in business.

Well, we found that window.open() would get us what we needed, albeit with some baggage. A window opened this way runs in our own origin, so we're not constrained by any sandboxing. On the downside, the UX is suboptimal, and we also have to handle the logistics of communicating across contexts. But this works as another important ingredient for our purposes. Once we launch a window through this method, we can run getDisplayMedia() and get our pixel stream!

Once we get our pixels from the popout, we can display them for everyone else on the stage, no problem.
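Roughly, the popout dance looks like the sketch below. This is an illustration under assumptions, not our exact code: the function names, the window name and the popup dimensions are made up, and the window and mediaDevices objects are passed in as parameters so the logic can be tried outside a browser. The cross-context messaging is left out entirely.

```javascript
// Runs inside the sandboxed iframe: open a popout served from our own origin.
// `win` is the iframe's window object; `popoutUrl` is a page we host.
function openCapturePopout(win, popoutUrl) {
  const popout = win.open(popoutUrl, 'screen-capture', 'popup,width=480,height=320');
  if (!popout) {
    // window.open() returns null when the browser blocks the popup.
    throw new Error('Popout was blocked by the browser');
  }
  return popout;
}

// Runs inside the popout: ask the user which screen, window, or tab to share.
// `mediaDevices` is navigator.mediaDevices in a real page.
async function captureScreen(mediaDevices) {
  try {
    return await mediaDevices.getDisplayMedia({ video: true, audio: false });
  } catch (err) {
    // NotAllowedError is what we get when the user dismisses the picker.
    if (err && err.name === 'NotAllowedError') return null;
    throw err;
  }
}
```

Browsers only allow getDisplayMedia() in response to a user gesture, so in practice captureScreen(navigator.mediaDevices) would be wired to a button click inside the popout.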

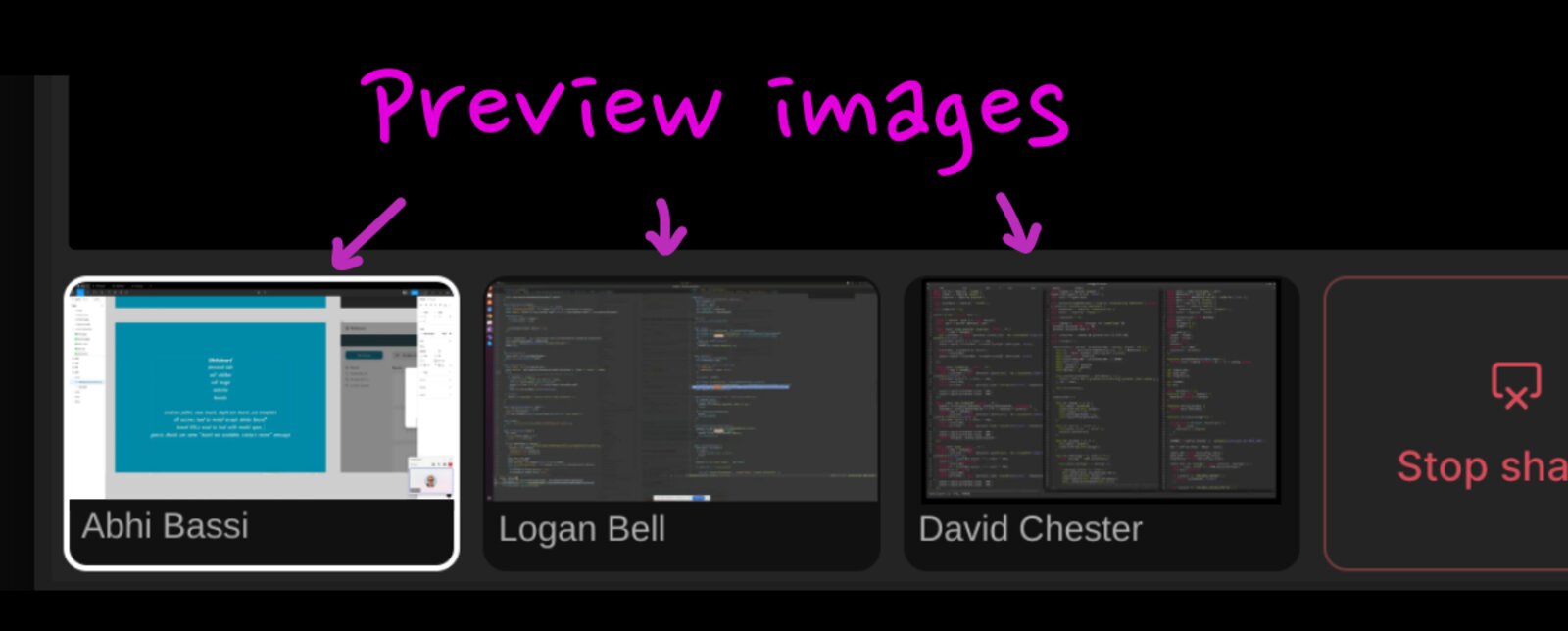

Preview images

Time to share these streams. First, we want to have ultra low-latency previews available so that people in the call can see what streams are active before fully loading them.

In order to accomplish that, each sender generates thumbnails on an interval, like this:

```javascript
async function generateScreenThumb({ mediaStream }) {
  // attach our stream to a video element
  const videoEl = document.createElement('video');
  videoEl.srcObject = mediaStream;
  await videoEl.play();

  // scale our stream preserving aspect ratio
  const targetWidth = 1600;
  const targetHeight = 900;
  const scale = Math.min(targetWidth / videoEl.videoWidth, targetHeight / videoEl.videoHeight);

  // webp wants our dimensions to be even numbers!
  const thumbWidth = scale * videoEl.videoWidth >> 1 << 1;
  const thumbHeight = scale * videoEl.videoHeight >> 1 << 1;

  // prepare our canvas
  const canvasEl = document.createElement('canvas');
  const canvasCtx = canvasEl.getContext('2d');
  canvasEl.width = thumbWidth;
  canvasEl.height = thumbHeight;

  // finally, put the frame on the canvas and then export as webp
  canvasCtx.drawImage(videoEl, 0, 0, thumbWidth, thumbHeight);
  return canvasEl.toDataURL("image/webp", 0.5);
}
```

We tried a range of sizes, quality settings, and encoding formats, and found that 1600x900 in WebP format with a quality of 0.5 gave us the best balance of image quality, low CPU utilization, and reasonable bandwidth use.
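The "on an interval" part could be sketched like this. It is an assumption-heavy illustration: startThumbnailLoop, generate, and publish are hypothetical names (publish stands in for however the thumbnail reaches the other participants), and the five-second default is simply our guess at a sensible cadence. Each round waits for the previous one to finish, so a slow encode never overlaps with the next.

```javascript
// Repeatedly generate a thumbnail and hand it to `publish`.
// `generate` would be something like () => generateScreenThumb({ mediaStream }).
// `publish` is a hypothetical function that ships the data URL to viewers.
function startThumbnailLoop({ generate, publish, intervalMs = 5000 }) {
  let stopped = false;
  let timer = null;

  async function tick() {
    if (stopped) return;
    try {
      const dataUrl = await generate();
      if (!stopped) await publish(dataUrl);
    } catch (err) {
      // One failed round should not end the loop; log it and try again later.
      console.warn('thumbnail round failed', err);
    }
    // Schedule the next round only after this one has completed.
    if (!stopped) timer = setTimeout(tick, intervalMs);
  }

  tick();

  // Call the returned function when the screenshare ends.
  return function stop() {
    stopped = true;
    clearTimeout(timer);
  };
}
```

Chaining setTimeout instead of using setInterval is a deliberate choice here: it keeps rounds from piling up if one of them takes longer than the interval.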

On-demand streaming

That gets us preview images, but of course we want full video streams too. For that, we use Azure Communication Services (ACS), which is the same WebRTC infrastructure that Teams calls use themselves. We create an ACS room, and then get viewer tokens for everyone in the call. We learned the hard way that ACS doesn't support concurrent PATCH requests to create viewer tokens, and that when those requests fail, the ACS client library provides no mechanism for knowing about the failure! Under the covers, we receive an HTTP error response like the following, but the client just happily sails through...

```http
HTTP/1.1 409 Conflict

{"error": "The room has been modified since the last request."}
```

Okay, so then we create them serially, and make our own calls directly to the REST API so we can deal appropriately when things go wrong upstream.
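In spirit, the serial version looks something like the sketch below. The names are hypothetical: sendPatch stands in for whatever function issues one PATCH against the REST API and resolves to a fetch-style response with ok and status fields. Retrying on a 409 is our illustrative choice here, not something ACS prescribes.

```javascript
// Issue one request at a time and surface any failure to the caller.
// `sendPatch(id)` is a hypothetical function that sends a single PATCH request
// and resolves to a fetch-style response ({ ok, status }).
async function addParticipantsSerially(participantIds, sendPatch, { maxAttempts = 3 } = {}) {
  const added = [];
  for (const id of participantIds) {
    let attempt = 0;
    for (;;) {
      attempt += 1;
      // Awaiting inside the loop guarantees no two requests are in flight at once.
      const response = await sendPatch(id);
      if (response.ok) {
        added.push(id);
        break;
      }
      // Assumption: a 409 means the room changed underneath us, so try again.
      if (response.status === 409 && attempt < maxAttempts) continue;
      throw new Error(`Request for ${id} failed with HTTP ${response.status}`);
    }
  }
  return added;
}
```

The important part is less the retry policy and more that every non-2xx response ends up somewhere we can see it, instead of being swallowed.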

When a user clicks to view a given screenshare, they connect to the ACS call and eventually the bytes start flowing. The process takes quite a while though: anywhere from two to ten seconds before the stream connection is established and active. So while the stream is loading, we show the preview image, which is at most five seconds old.
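The preview-then-stream handoff can be sketched as below. All three parameters are hypothetical stand-ins: latestPreview is the most recent thumbnail data URL, connectStream is whatever joins the ACS call and resolves once video is flowing, and show is whatever updates the UI.

```javascript
// Show the latest thumbnail right away, then swap in live video once connected.
async function viewScreenshare({ latestPreview, connectStream, show }) {
  // Step 1: the viewer sees something immediately.
  show({ kind: 'preview', src: latestPreview });
  try {
    // Step 2: this is the slow part (seconds, not milliseconds).
    const stream = await connectStream();
    // Step 3: replace the still image with the live stream.
    show({ kind: 'video', src: stream });
    return stream;
  } catch (err) {
    // Keep the preview on screen and let the UI report the failure.
    show({ kind: 'error', src: latestPreview, error: err });
    throw err;
  }
}
```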

All together now

There's even more to it: authentication and permission grants via OAuth, managing ownership of the Teams stage, sharing URLs to the stage, sharing multiple monitors, differentiated access for facilitators, etc. But we've covered the basics, and we'll leave the rest for later. In the meantime, check out MultiShare on AppSource. We'd love to hear any feedback you have!

Thanks for reading...

We make truly awesome collaboration tools for Microsoft Teams, and we'd love to show you around.